How to Analyze 360 Feedback Results and Interpret Reviewer Group Differences

360-degree feedback provides a unique opportunity to see how different individuals rate an employee’s performance. It empowers employees to identify strengths and areas for improvement, serves as a catalyst for change, and supports team-building, career development, and leadership growth.

However, because 360 feedback results come from multiple sources or “reviewer groups,” they can often be confusing, inconsistent, and difficult to interpret—at least without the right tips for interpretation. Here’s what you need to know about analyzing your 360 feedback results and interpreting reviewer group differences:

Collecting 360-Degree Feedback from Multiple Perspectives

360-degree feedback incorporates multiple perspectives, including those of one’s supervisor, peers, and direct reports, as well as the employee’s own self-assessment. Aggregating 360 feedback provides a more complete picture of performance, helping to minimize unconscious bias and produce more well-rounded results for each individual. Because it draws on several sources, multi-rater feedback also tends to be more comprehensive and actionable than feedback from a single rater.

With that said, reviewer groups perceive performance and behavior differently, so their feedback often diverges. Each group tends to focus on the core competencies that matter most to it and/or those it has had the most opportunity to observe directly.

For example, managers typically emphasize technical competence and bottom-line results. On the other hand, peers often prioritize collaboration, influence, and interpersonal factors, whereas direct reports tend to focus on coaching, communication, leadership, and managerial skills when reviewing their supervisors.

Interpreting 360 Feedback Results and Reviewer Group Differences

Despite potential discrepancies across feedback from multiple reviewer groups, 360-degree assessments can still be a valuable tool when approached the right way. In fact, in many cases, the discrepancies are what make 360 feedback so valuable, offering distinct perspectives that can help employers draw conclusions about strengths and opportunities for improvement.

Making sense of equally valid yet differing 360-degree feedback requires a degree of interpretation. To effectively analyze 360 feedback results:

Look for trends across feedback.

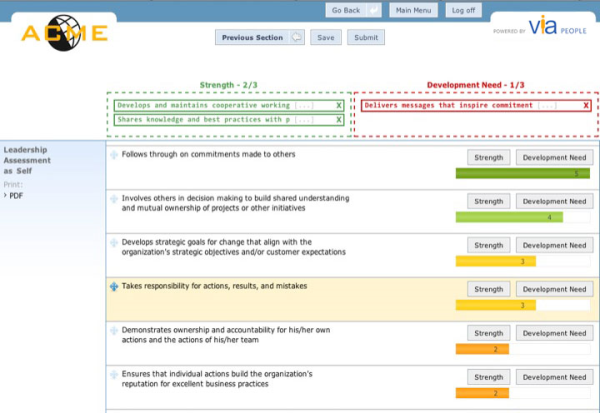

Start at a high level and then drill down into the details, looking for similarities in competency averages. From there, you can look for consistencies in perceived strengths and development needs, pinpointing the highest- and lowest-rated behaviors and how your 360 feedback results relate to each other. See if there are any competencies or behaviors that all reviewers seem to agree are strengths because these are areas you can utilize on the job and in your ongoing development efforts.
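As a rough illustration of this step, the sketch below averages ratings by competency and reviewer group, ranks competencies from highest to lowest, and lists the ones every group rates highly. The sample data, the 1-5 scale, the function names, and the 4.0 threshold are all assumptions made for the example, not part of any particular 360 tool.

```python
from statistics import mean

# Hypothetical ratings on a 1-5 scale: (reviewer_group, competency, score).
ratings = [
    ("manager", "communication", 4),
    ("peer", "communication", 5),
    ("peer", "communication", 4),
    ("direct_report", "communication", 4),
    ("manager", "delegation", 3),
    ("peer", "delegation", 2),
    ("peer", "delegation", 3),
    ("direct_report", "delegation", 2),
]


def group_averages(rows):
    """Return {competency: {reviewer_group: average score}}."""
    buckets = {}
    for group, competency, score in rows:
        buckets.setdefault(competency, {}).setdefault(group, []).append(score)
    return {
        comp: {grp: mean(scores) for grp, scores in groups.items()}
        for comp, groups in buckets.items()
    }


def shared_strengths(averages, threshold=4.0):
    """Competencies where every group's average meets the (assumed) threshold."""
    return sorted(
        comp
        for comp, groups in averages.items()
        if all(avg >= threshold for avg in groups.values())
    )


averages = group_averages(ratings)

# Rank competencies by the mean of the group averages, highest first.
ranked = sorted(
    averages,
    key=lambda comp: mean(list(averages[comp].values())),
    reverse=True,
)

print("Highest to lowest:", ranked)
print("Shared strengths:", shared_strengths(averages))
```

Averaging within each group first, rather than pooling all raters, keeps a large peer group from drowning out a single manager rating; whether that weighting suits your review process is a judgment call.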

Consider reviewer group differences.

Next, look for large differences or inconsistencies in 360 feedback results, and consider why they exist. Which competencies and behaviors do reviewers disagree on? These are areas that likely warrant further thinking, exploration, and discussion with your manager or peers.

For instance, you may discover differences among feedback from your peers or direct reports. Don’t be alarmed—this actually presents you with the opportunity to dig deeper and ask questions to investigate further, such as:

- Does your behavior actually differ when interacting with various reviewer groups? Can you think of specific examples or scenarios?

- What specific opportunities have the reviewers had to observe your behaviors?

- Who can assist you—whether it is your manager, trainer, trusted colleague, or another fellow employee—in gaining further clarity?
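Before working through questions like these, it can help to see where the groups disagree most. The sketch below is one simple way to do that: for each competency it takes the gap between the highest and lowest group average and flags gaps above a cutoff. The sample averages, function names, and the 1.0-point cutoff are illustrative assumptions.

```python
# Hypothetical average ratings (1-5 scale) per competency and reviewer group.
averages = {
    "collaboration": {"manager": 4.2, "peer": 2.9, "direct_report": 4.0},
    "coaching": {"manager": 3.8, "peer": 3.6, "direct_report": 3.5},
}


def group_gaps(avgs):
    """Return {competency: (gap, highest_group, lowest_group)}."""
    gaps = {}
    for comp, groups in avgs.items():
        high = max(groups, key=groups.get)
        low = min(groups, key=groups.get)
        gaps[comp] = (round(groups[high] - groups[low], 2), high, low)
    return gaps


def flag_disagreements(avgs, min_gap=1.0):
    """Competencies whose group gap meets the (assumed) cutoff."""
    return sorted(
        comp for comp, (gap, _, _) in group_gaps(avgs).items() if gap >= min_gap
    )


for comp, (gap, high, low) in group_gaps(averages).items():
    print(f"{comp}: gap {gap} ({high} highest, {low} lowest)")

print("Worth discussing:", flag_disagreements(averages))
```

A flagged gap is a prompt for conversation, not a verdict: it shows where perceptions differ, and the questions above help explain why.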

It’s also a good idea to analyze employee self-ratings alongside other 360 feedback results. Self-ratings in 360 feedback provide insights into self-awareness and highlight the potential for future leadership derailment. To effectively evaluate employee self-ratings, check whether they are consistently higher or lower than the ratings given by others.
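One way to run that comparison is sketched below: subtract the average rating from others from the self-rating on each competency, then check whether the gaps all point the same way. The sample scores, function names, and the 0.5-point tolerance are assumptions chosen for illustration.

```python
# Hypothetical 1-5 ratings: the employee's self-rating and the average
# rating from all other reviewers, per competency.
self_ratings = {"communication": 5, "delegation": 4, "planning": 5}
others_avg = {"communication": 3.5, "delegation": 3.0, "planning": 4.5}


def self_other_gaps(self_scores, other_scores):
    """Return {competency: self-rating minus others' average}."""
    return {
        comp: round(self_scores[comp] - other_scores[comp], 2)
        for comp in self_scores
        if comp in other_scores
    }


def rating_pattern(gaps, tolerance=0.5):
    """Label the overall pattern, using an assumed tolerance in rating points."""
    if not gaps:
        return "no comparable competencies"
    if all(gap >= tolerance for gap in gaps.values()):
        return "consistently higher than others"
    if all(gap <= -tolerance for gap in gaps.values()):
        return "consistently lower than others"
    return "mixed or broadly aligned"


gaps = self_other_gaps(self_ratings, others_avg)
print(gaps)
print("Self-rating pattern:", rating_pattern(gaps))
```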

Above all, keep in mind that the goal isn’t to force feedback into specific categories or neat little columns of strengths and development needs. Instead, focus on taking action based on the 360 feedback results that are most critical for both your organizational and employee development—whether the feedback came from one source or many.

Analyzing and Interpreting 360 Feedback Results with viaPeople

Struggling to make sense of multi-rater feedback? viaPeople’s 360-degree feedback solution makes it easy to identify reviewer group differences, draw conclusions about strengths and opportunities for improvement, measure performance, and take action based on your results. Request a demo for a firsthand look.
